On Linear Algebra (er... what?)

I found an old textbook on my sheLf. Something "prompted" me to take it out and put it on the tabLe. I didn't know why I was Moved to do that—until now. But wow, what a connection!

(Opening scene, act I. In which oddly capitalized letters and quoted words in the title may matter, in the end.)

This is a post about AI: how the story ends up there is the joy of the journey, the twist of the tale, the soul of the story.

But let’s start here: CognitiveCarbon’s lost library.

For a few difficult and dark years between 2019 and 2023, my most prized possessions—my small library of books—were stowed away in a dark and dusty garage.

Tucked away into a few dozen foldable banker’s boxes—laid away out of sight, out of mind, out of reach. This all happened after I was forced to sell the family house in mid 2019, which used to have a little office where I kept my books.

An office with a little glass desk with a keyboard and mouse that a certain cat named Pumpkin used to like to lie on while I worked. Oh, but a wonderful story about him is coming soon. My little office: a sanctuary lost.

A little library with books full of …words.

John 1:1 —

“In the beginning was the Word,

and the Word was with God,

and the Word was God."

Wait until you see, in a future post, how that thought above led me to a glimpse of where Large Language Models might eventually lead humanity.

Of course, the quote was from the book of John…which has its own special significance.

But for now, let’s stay here, in this tale that begins with books, and ends somewhere entirely different, in Cognitive Carbon’s signature style.

During those four lost years, I was living in a small cracker-box cottage out in the countryside near Kingsburg, CA, half of those years with my youngest daughter Julia and two dogs, and there was no room to keep my books inside.

They were stowed away through several summers of 112F heat. At that time, I didn’t have the motivation to read anyway in those dreary, post-divorce days.

I was lost in the wilderness, wandering through the dark night of the soul.

After I met Emily in 2022, she and I packed up my meager belongings in April of 2023, and we drove across country to our new home in the farmlands of Michigan.

See this post about our trip, if you haven’t already: Time Loops: Echoes of 1976 in 2023 and this one: The World has Changed since I Last Wrote to You.

That second one has surprising relevance to this current post, though I couldn’t have known it then.

As we unpacked my small trailer at an old farmhouse on the edge of a cornfield a few days after my 56th birthday, one of the things that first brought me a wave of comfort and warmth in that spring of 2023 was having my books back again within reach.

Emily knew that, and bought me a few bookshelves in advance.

Even just to see those books there when I walked by in the morning reconnected me in deep ways to the fabric of my life—revivifying spirit and memories.

A lifetime of stories, knowledge, frameworks of thought and ideas and what I call “time portals” were in that collection of books.

Some of them were kids’ books from my childhood that I read over and over; some were books my mom or dad passed down from their bookshelves or bought for me in my teens and 20’s; some were college textbooks or collections of science, history or literature that I had bought over the years.

Jack London. Mark Twain. Old dictionaries, compendiums of science, encyclopedias of mathematics, books on philosophy and physics and art.

Memories.

I use the concept of “time portal” now and then to describe certain things that, for me, connect me almost instantly to crystal clear snapshots of other times and places in my life.

Certain books are portals: wormholes to other some-whens and some-wheres.

As a child, I had a near photographic memory: so much so that my dad would rely on me to find things that he had misplaced.

He’d say: “Eric, where is the blue-handled Phillips screwdriver?” and I’d close my eyes, visualize the entire house, rotate it in 3D in my head this way and that; zoom in here and there; then mentally open the drawers whose contents I could literally see in my mind. Mental translations, rotations, reflections, re-scaling.

Then I’d open my eyes and tell him: “It’s in the top drawer in the kitchen, the one to the left of the stove, on the right side of the drawer in the tray next to the yellow-handled scissors.”

Some possessions and books that I have kept can reawaken parts of that latent ability. As I’ve grown older, the “photographic memory” ability that I once had has faded, but now and again, some parts of that gifted ability pierce through and I can literally see, feel, and smell some place as if I were standing there.

These “time portal” objects unlock those moments.

I listened to a video with interest a few months ago about people who have extraordinary memories, and I find it fascinating that human minds have such amazing capacity.

I also read an interesting article, “Are memories stored outside the brain?”, which suggests that “memory” is not just stored in neurons within our brains.

Maybe “memory” is even something that extends beyond our physical bodies: in our souls, entangled with the Akashic Records.

Anyhow…back to that “Linear Algebra” book that I was moved to take out and put on the reading table, without first understanding “why that book?”.

When I was a sophomore in college at Rose-Hulman Institute of Technology around 1987, there were certain required courses I had to take.

One of them was “Linear Algebra.”

At the time that I saw that course appear on my schedule, I was naive. I had taken Calculus I and II in high school; and I had just finished three quarters of Calculus and Differential Equations among courses in physics and electrical engineering as a freshman.

And now I discovered that I had to take “Algebra” again? What the hell?

I took Algebra back in 7th grade (solving for “X”, equations of lines, graphing X-Y charts, y = mx + b and all that jazz. I bet this brings back nightmares to some of you...)

Why on Earth am I having to take “Algebra” again? I felt a pang of annoyance, but I walked over to the bookstore on campus to buy the textbooks for the coming quarter, and I bought the Linear Algebra book.

The one you see in the photo above.

I was the kind of student who would actually read the books for upcoming college courses in advance of taking the class, so I popped open the cover in the late summer before classes started, and began reading.

I soon realized that Linear Algebra was not at all what I thought. It wasn’t about lines and solving for X.

It had something to do with “vectors” and “matrices” (what were those?) and “dot products” and “cross products” and “diagonalization” (head scratching ensued).

It had something to do with the mathematics of translations, rotations, reflections, re-scaling.

In the end, I did pretty well in the course and it was fun and challenging. In fact, that year, I received my second Certificate of Merit in Mathematics award from the faculty. I was a rising star at Rose-Hulman, destined to graduate magna cum laude a few years later.

The professor was Dr. Roger Lautzenheiser, and he was a gifted teacher. Toward the end of the course, he pulled aside three or four of his top students and told us to come back a while later, just the small group of us and him. He did a special side-lecture for us because he knew we were eager learners.

Aside: I had started writing this post a few months ago, but I had left it unfinished in draft. I have 59 other articles in various stages of completion, but I’ve been working 50-70 hours a week lately trying to make ends meet, so not much time is left for writing.

But then one day a few weeks ago, someone liked one of my old posts—and that person’s last name happened to be “Lautzenheiser”.

Greetings, whoever you are! A rare last name. So I thought: “This is God’s way of telling me to finish writing this particular article.”

Over the course of a few hours during that special lecture, Dr. Lautzenheiser revealed to us that Linear Algebra was actually an important foundation for understanding huge swaths of modern mathematics; it helped connect and reveal many new facets of theoretical and applied mathematics.

I remember the feeling of awe I felt when he opened that curtain, though I can’t remember many of the details of his talk that day. I just remember the feeling: “so THAT is why Linear Algebra is so important. It helps connect all of mathematics. Wow.”

And then I went on, graduating from college and entering the workforce, and didn’t find much use for Linear Algebra in my daily work.

One day, though, I learned about a new thing while working at TRW Space and Defense called a “relational database”. It was something called SQL (pronounced “see-kwull”), and the software guys were all abuzz over what they could do with it to connect and relate data.

I shrugged my shoulders and went back to working on my hardware and chip designs; but a decade later, I would learn to use SQL myself, when I transitioned out of being a hardware designer and began writing data analytics software.

While other programmers were struggling to grasp the concepts of SQL, I quickly realized that I intuitively knew something about “left outer joins” and “right outer joins” (which are part of how databases work to interrelate tables of information) that they did not: these database constructs were analogous to the result of Linear Algebra matrix cross products and dot products!

Thanks again, Dr. Lautzenheiser.

Because of this foundation, I grasped something about how to think about and use SQL that they could not.
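For readers who want to see that analogy in concrete form, here is a minimal sketch of one way to look at it. It is an illustration, not a description of how any real database engine works, and the analogy is a loose one. The two tiny tables and every name in the code (`customers`, `orders`, `one_hot`, `left_outer_join`) are made up for this example. Each row's key is turned into a one-hot vector, the dot product of two such vectors is 1 exactly when the keys match, and the grid of all those dot products is the matrix product of one table's vectors with the transpose of the other's. A left outer join then reads that grid row by row and keeps left rows that matched nothing.

```python
# Toy tables (hypothetical data, for illustration only): (key, value) pairs.
customers = [("c1", "Ann"), ("c2", "Bob"), ("c3", "Cy")]
orders = [("c1", "book"), ("c1", "pen"), ("c3", "lamp")]

# Every distinct key gets its own coordinate axis.
keys = sorted({k for k, _ in customers} | {k for k, _ in orders})


def one_hot(rows):
    """One vector per table row: a 1 on the axis of that row's key, 0 elsewhere."""
    return [[1 if k == key else 0 for key in keys] for k, _ in rows]


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


A = one_hot(customers)
B = one_hot(orders)

# match[i][j] = A[i] . B[j], which is the matrix product A * B^T.
# An entry of 1 means customer i and order j share a key.
match = [[dot(a, b) for b in B] for a in A]


def left_outer_join(left, right, match):
    """Read the match matrix row by row; unmatched left rows are kept with None."""
    out = []
    for i, (_, name) in enumerate(left):
        hits = [right[j][1] for j, m in enumerate(match[i]) if m == 1]
        if hits:
            out.extend((name, item) for item in hits)
        else:
            out.append((name, None))  # plays the role of SQL NULL
    return out


joined = left_outer_join(customers, orders, match)
print(match)
print(joined)
```

Bob has no orders, so his row of the match matrix is all zeros, and the left outer join still lists him with an empty slot where an order would be.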

As time went on, I forgot about Linear Algebra again. Until the early 2000s, when a friend who was a former colleague of mine during my supercomputing designer days ended up at this new company called “NVidia”.

They made PC computer boards back then for “computer graphics” acceleration, and the onset of PC video games made them wildly popular. I learned that inside these NVidia boards were chips called “Graphics Processing Units” or “GPUs.”

The basic idea was that game designers would represent “3D” models of the objects in the games using small triangles which had color and textures applied (they would build a “mesh” of small triangles in the shape of the game characters and objects.)

Then when the characters in the game would move through the scenery of the game, the GPUs would recalculate, really fast, where all the edges and corners of the triangles were after the movement, and then update the views, shadows, textures and lighting of the scene in the game.

It turns out those GPUs were performing specialized math really fast, because you need to recompute the new locations of the corners and edges of all those little triangles in the ‘meshes’ that made up the game characters as things moved in the game.

And, perhaps you guessed it, Linear Algebra was the math required to do those calculations. Translations, rotations, reflections, re-scaling.
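To make that a little less abstract, here is a small sketch of the idea in two dimensions. It is a simplified illustration in plain Python, not how a real GPU or graphics API is programmed, and all the function names are my own. Each corner of a triangle is written as (x, y, 1), a common trick called homogeneous coordinates, so that translation, rotation, reflection, and re-scaling can all be expressed as 3x3 matrices and chained together by matrix multiplication.

```python
import math


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


def translation(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def rotation(degrees):
    """Counter-clockwise rotation about the origin."""
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def scaling(sx, sy):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]


def reflection_x():
    """Mirror across the x-axis (flip the sign of y)."""
    return [[1, 0, 0], [0, -1, 0], [0, 0, 1]]


def transform(M, points):
    """Apply the 3x3 matrix M to each (x, y) point, rounding away float noise."""
    out = []
    for x, y in points:
        col = matmul(M, [[x], [y], [1]])
        out.append((round(col[0][0], 6), round(col[1][0], 6)))
    return out


triangle = [(0, 0), (1, 0), (0, 1)]

# Compose three steps into ONE matrix. The rightmost matrix is applied first:
# scale by 2, then rotate 90 degrees, then shift by (3, 1).
M = matmul(translation(3, 1), matmul(rotation(90), scaling(2, 2)))

moved = transform(M, triangle)
mirrored = transform(reflection_x(), triangle)
print(moved)
print(mirrored)
```

The useful part is the composition step: however many moves are chained together, they collapse into a single matrix, and that one matrix is then applied to every corner of every triangle. Doing that same multiplication across a huge number of points at once is the kind of work graphics hardware was built for.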

So, I learned how computer graphics worked because of my understanding of the concepts of Linear Algebra. But I didn’t do computer graphics for a living, so time went on, again, and I didn’t think much about GPUs for a decade.

Then, in 2022, the world changed. I was working as a CAD designer for an irrigation systems company in Reedley, California—a job I was blessed and fortunate to have landed after being unemployed and broke during the depths of COVIDiocy in 2020 after my startup company in commercial insect rearing collapsed.

(I have done some really far out jobs; raising 3.5 million black soldier fly larvae every week is high on the list of weird ones.)

CAD, or Computer Aided Drafting, uses GPUs to create the drawings, of course…

Anyhow, there were days in the late fall and early winter when there wasn’t much seasonal irrigation work to do, and I worked in an office all by myself much of the time.

So one day in late 2022, I had a browser open on my PC and I was just idly looking at Twitter and reading news for a bit.

And I stumbled across this new-fangled thing called “ChatGPT”.

I read up a bit about how it worked and played with it…and it was immediately compelling and powerfully interesting.

In those first few days, I had an idea: since ChatGPT seemed to be good at “language” but at the time, bad at math, and I had just been using a website called Wolfram Alpha that was very useful for answering mathematics questions, I wondered: what if AI researchers can somehow combine the best of both of these approaches?

I started searching to see if anyone else had already thought of merging these two things, and lo and behold: I found that someone had already written articles about it and created a few YouTube videos on the topic. That person was none other than Stephen Wolfram, who had created Mathematica and Wolfram Alpha!

That little exploration led me to read more of Stephen’s recent writings, and I stumbled across something interesting that he was working on: the Wolfram Physics project.

I won’t go into much detail about this right now, but even back in 2022 I recognized something important—if there was going to be a way for modern AI to understand everything about physics and math, eventually understanding more about the Universe than even we humans know….it would likely happen if AI became proficient at the methods in Wolfram Physics, because of their special form. But that is a deep rabbit hole story for another day.

Nonetheless, my guesses about where AI could be heading were confirmed when Elon Musk founded xAI in 2023.

From Grok:

“xAI was formed on March 9, 2023, though Elon Musk officially announced its creation on July 12, 2023, tying the date (7 + 12 + 23 = 42) to The Hitchhiker's Guide to the Galaxy and the company's mission to ‘understand the true nature of the universe.’”

So let’s pause to translate, rotate, reflect, and rescale our thinking about this Linear Algebra topic. What does it have to do with AI?

Well…it turns out that ChatGPT and the like run on NVidia GPU chips…derived from the ones that were originally used to generate smooth and fast computer graphics.

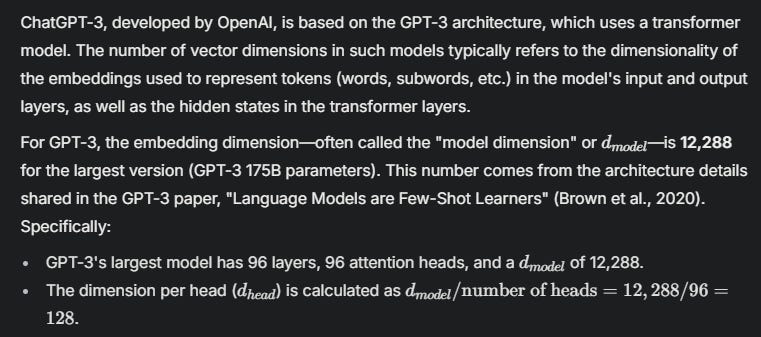

The reason is that under the hood, Large Language Models—or LLMs for short—represent “concepts” in a high-dimensional vector space. And vector manipulation is the domain of … well, of course: Linear Algebra.

12,288 Dimensions. Now that is an interesting space.
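Here is a toy sketch of what "concepts as vectors" can mean. The three word vectors below are invented for illustration and have only 4 dimensions instead of thousands; they do not come from any real model. The point is only the mechanics: each concept is a list of numbers, and a dot product (scaled by the vectors' lengths, which gives the cosine similarity) measures how closely two concepts point in the same direction.

```python
import math

# Hypothetical 4-dimensional "embeddings". The numbers are made up for this
# example; a real language model learns its own, in far more dimensions.
embeddings = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.0],
    "stove": [0.0, 0.1, 0.9, 0.8],
}


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    """Length (Euclidean norm) of a vector."""
    return math.sqrt(dot(u, u))


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: near 1 means 'same direction'."""
    return dot(u, v) / (norm(u) * norm(v))


sim_cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
sim_cat_stove = cosine_similarity(embeddings["cat"], embeddings["stove"])

print(f"cat vs dog:   {sim_cat_dog:.3f}")
print(f"cat vs stove: {sim_cat_stove:.3f}")
```

With these made-up numbers, "cat" and "dog" score close to 1 while "cat" and "stove" score much lower. The same dot products, done on much longer vectors and in enormous batches, are the kind of arithmetic the GPUs are kept busy with.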

For the more intrepid explorers among you: see video 1, video 2, and video 3: LLM memory.

Little could I have known back in 1987—as a sophomore struggling to understand why I had to take a silly new course in Linear Algebra—that it would ultimately lead to machines capable of understanding language.

ALL human languages, present and past, in fact, while being able to translate between all of them, instantly, like the mythical Babel fish.

I couldn’t have foreseen that Linear Algebra would allow computers to rapidly understand vast quantities of uploaded text and then explain it in summary form using two voices that sound like podcasters talking to each other.

Like this fascinating example, about the claim that there is something massive underneath the Pyramids at Giza. Yes… those are actually two AI voices. Really.

I couldn’t have known then that Linear Algebra would lead to machines capable of generating voices with emotion, humor, cadence and inflection; that it would give rise to machines having the ability to reason, program, and “think”; that it would let them not only understand voices but also generate photos, and video in real-time—all while helping to train a new legion of humanoid robots that will eventually lead to a golden age and the end of scarcity.

AI is now beginning to grasp Fields Medal levels of super complex math, do computer programming at a level better than many PhDs (I know this with certainty, because I use AI every day in my work developing software), understand physics and protein folding (helping to win a Nobel Prize in the process) and more.

Although just two years ago ChatGPT and its competitors were like really smart bookworm teenagers, they have rocketed upward in skill and knowledge at an incomprehensible pace.

They know how to use, and explain, Linear Algebra better than I ever did, or will, or could.

Two years ago, ChatGPT could write software like a freshman in college, ranked in the top 1 million best programmers in the world on competitive programming benchmarks; and now, just a short time later, it ranks as the 75th best programmer in the world, with PhD-level skill.

Later this year or next, it will be #1. And once there, it will be the best, forever.

AI is also beginning to reason at a level soon to outstrip—at first—the best human minds in each of these areas…but shortly, even outstrip the knowledge and reasoning of ALL human minds, combined.

Artificial Super Intelligence, or ASI, is coming…and it will exist because someone studied Linear Algebra, as I did, back in college during the ’70s and ’80s.

Thank you, Dr. Lautzenheiser, for opening the world to me that day, though it has taken me until age 58 to fully understand what you meant about how important Linear Algebra was to the world.

Postscript

Before I leave this AI topic today: I’m inspired to share one more point.

There is a lot of polarization around this subject; a lot of fear mongering. Is AI good? Is it bad? Will it help or destroy humanity? Is there utopia or dystopia ahead? Is “Rosie the Robot” from the Jetsons our future, or “the Terminator”?

Like any tool mankind has ever built, AI has the potential for both. In the end, it is people, and their motivations to wield such tools, that determine which path forward occurs.

I am an AI optimist: I believe that there is a potential path forward for all of humanity that can lead to a “Golden Age” where scarcity becomes nearly unknown, and work becomes optional.

You will be able to work on what you WANT to do, with meaning and purpose. You will work when and how you choose. You will no longer work solely because you have to—or die of starvation.

You will be truly free.

I further believe that God endowed humanity with a creative spark for a reason. Art, music, science … it all has a purpose. One of those purposes might be to help us help ourselves and help others to create a world free of deceit, war, pain, suffering, neglect, ignorance, hunger, isolation, and need.

A world in which we are free to live, learn, wonder, experience, love, create, and be inspired.

It is very interesting to me that the current wave of AI is built on Large Language Models: it is literally built on our use of WORDS to understand and make sense of the world and the Universe.

In the beginning…was the WORD.

I don’t think God led us down this path toward ASI without an intentional plan for us. I don’t think AI is in conflict with God’s plan, or the path to our destruction. I think it is part of God’s plan for welcoming us home.

I have more ideas to share on this thread in future posts.

Eric, you’re stirring up memories—some I had forgotten, and others I might not have even realized were there at the time. Linear Algebra is completely beyond me. Growing up and going to school in Germany, I never even had advanced Algebra.

But setting Linear Algebra aside, one thing that stands out—and always has—is your passion for math and science. I remember back when you were a senior in high school in Indianapolis. Your math teacher walked out of the classroom one day, clearly frustrated—I can’t recall exactly why. But with a Calculus test looming the next day, and the class suddenly without a teacher, you stepped up. You went to the blackboard and took over.

Even then, your teaching skills were unmistakable. You prepared your classmates for the test, and I remember being so impressed and proud. It was clear you had a gift.

I loved Linear Algebra and Group Theory (mid 1970s for me). While I couldn't see the applications then, it didn't take long as I watched computer programs evolve until office and then home computers became the norm. Thanks for the inspiration to pull out my Linear Algebra book, which has survived 50 years of moves and occasional purges.