Supercomputing in 2021

I helped build one of the world's fastest supercomputers back in the mid 1990's. Twenty-five years later, the fastest supercomputer on Earth that is now online in Japan is unimaginably more powerful

In the mid 1990’s, I worked at a supercomputing startup company in Pasadena that was founded by a team of brilliant PhD’s from Caltech. I was the 10th founding member (and the only one not from CalTech. I graduated Magna Cum Laude from Rose Hulman Institute of Technology.) Our company made a type of special computer called a ‘Massively Parallel Systolic Array Processing Supercomputer.” I’ll explain what that mouthful of techy terms means here in just a bit.

It was an incredibly challenging engineering project that took a team of 10 “rocket scientist” types to begin the work; the team eventually grew to over 200 employees seven years after launch. The company was eventually purchased by Celera Genomics in the year 2000, but sadly, it is no longer in existence.

The computers that we built were narrowly focused on certain types of problems; they weren’t general purpose supercomputers that could be used, for example, to forecast weather, simulate molecular chemistry, or calculate orbital trajectories of space objects.

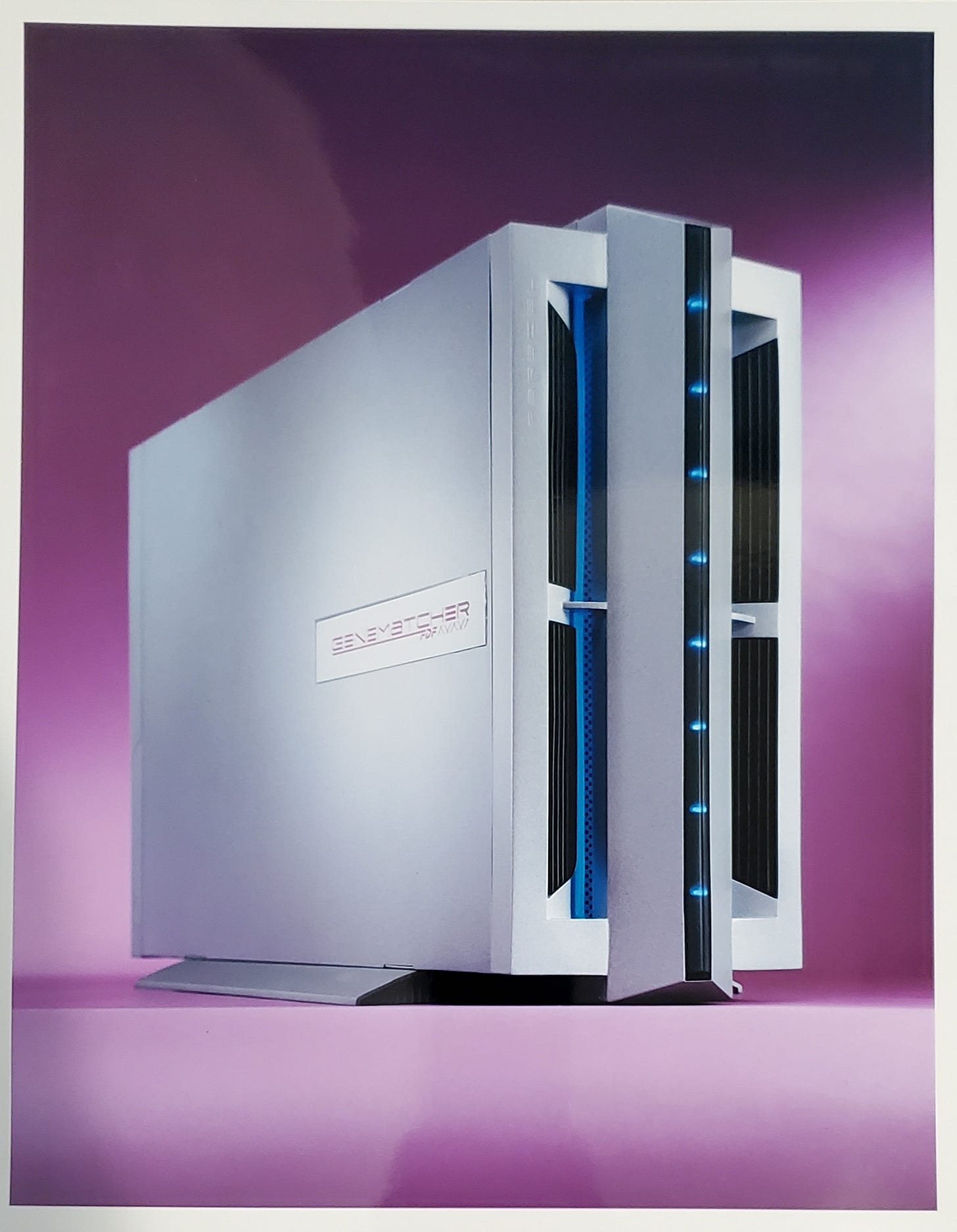

But on the particular range of problems that they were designed for, they were the best in the world, at the time. They were beautiful, powerful, fire-breathing dragons of machines. I was one of the first engineers in the world to use blue LEDs for aesthetics (they were brand new at the time, and cost about $17 a piece.)

One of our team members told me once that back in 1996 or 1997, our supercomputers held the world record for raw supercomputing compute power for a period of about five or six weeks (until some competitor raised the bar and took away the title.)

One of our customers used our equipment for signals intelligence analysis (I will leave you to guess who that might have been) and another customer used them for computations related to Human Genome Project DNA sequence analysis.

The first complete map of human DNA was completed in 1999, and the private sector company that did it back then was run by Dr. Craig Venter, then of Celera Genomics. They did it in a unique way called “shotgun sequencing” and the process heavily used PCR (yes, that PCR.)

I designed the GeneMatcher-1 “chip” that was used for a time in the computational work that was needed to determine the sequence of the three billion base pairs in the first whole human genome. The system in the photo above used 144 such chips in a long sequence.

Back then, it took years and cost hundreds of millions of dollars to sequence just one complete genome; now you can get your own DNA sequenced for hundreds of dollars, and it can be done in a matter of days.

Let’s circle back and decode the terms: A “Massively Parallel Systolic Array” means an array of “processors” that are doing work in parallel, meaning “number crunching all at the same time”; “massive” means there are lots and lots of them working all at once; and “systolic array” means that the way they do their work is analogous to a “heartbeat”.

That is, on every “heartbeat”, or clock tick, all the processors do some calculation or operation, and then on the next tick, marching in step, they all simultaneously do whatever they are supposed to do next. In our system, the processors were arranged in a “linear array”, meaning they were all arranged in a long chain; as one processor did its thing, it would take data from a downstream processor, do its operation, and hand off data to an upstream processor.

We had a “desktop” version of what we called Generation 3 which had 3,600 of our special-purpose processors inside it. It had the theoretical compute performance equal to 240 Pentium-processor based PCs of the kind that were around in 1995 (Intel had just brought Pentium CPUs to the market that ran around 300 MHz at that point in time.) Our next more powerful system had the equivalent of three “desktop” systems arranged inside a rack-mountable package.

To give you a visual analogy, let’s say that a thin brick one quarter inch thick represents the compute power of a 300 MHz Pentium based IBM PC desktop computer back in 1995.

That blue brick next to the computer screen symbolizes “one unit of computing power.”

Our Gen 3 supercomputer that was designed for signals intelligence had 3,600 processors packed into a suit-case sized package (about 19” x 7.5” x 36”)— so it wasn’t much bigger than an IBM PC of the day.

But in terms of compute power, it was a Ferrari compared to a model T Ford. That suitcase sized box could do the computational work equivalent to about 720 Pentium processor PCs. The green pile to the left of the Gen 3 is a visual equivalent to 720 stacked “bricks” of “one unit of computing power”. I included “Jake” in the visual model so that you could get a feel for the sizes of these machines. We’ll see Jake later.

The customer we sold these systems to wanted many of these computers, so we designed them such that eight of them could fit into a standard 6-foot-tall equipment rack. One “Tower” of these Gen 3 units therefore had the compute power equivalent of 5,760 Pentium based PCs.

While we were selling and installing hundreds of Gen 3 units, we were also hard at work designing the next generation. It took us about 18 months to develop the Gen 4. One of these new units, exactly the same size as the Gen 3, could do 250% more calculations in the same volume as compared to the Gen 3. That was equivalent to 1,850 Pentium based PCs.

We sold the Gen4 systems in 6-foot-tall racks as well; one of these Gen 4 Towers had the compute equivalent of 14,700 Pentium PCs. The computing industry likes to speak in terms of “operations per second” or “floating point operations per second”.

The Gen 4 tower could do about 4.4 trillion operations every second, or 4.4 “Tera - Ops”. Tera is a prefix meaning “trillion”.

I’m going to sweep the difference between “Ops” and “Flops” under the rug for now, because it’s not terribly important for this story.

Over the years, we sold the equivalent of maybe 125 of those Gen 4 towers, so the total aggregate computer power of everything we ever built over a decade was about 550 Tera Operations per second (“550 TOPS”).

Zerohedge recently profiled the world’s top supercomputers in this article. The top contender in 2021 is Japan’s Fugaku, coming in at a mind blowing 442,010 Tera FLOPS.

So how does that compare to the Gen 4 towers that I built back in 1997—the ones that held the record for fastest supercomputer for a brief time in those years?

Well, here is a graphic showing the equivalent number of Gen 4 Towers that it would take in order to match Fukagu’s performance: 99,900 of those 6-foot-tall racks that I designed and built back in 1997 would be needed to match its current performance.

In the picture below, I made a pile of 99,900 Gen 4 racks stacked next to my model dude “Jake” so that you could see the comparison visually. That’s him, the tiny ant next to a skyscraper.

This visually shows you an almost incomprehensible degree of improvement in computing power over 20 years. But here’s the thing: Fukagu is a supercomputer in Japan that is known about publicly.

Would you like to hazard a guess as to what might be hidden away in certain government agencies—systems that match or even dwarf the mighty Fukagu?

What do you suppose those things are capable of computing? Do you suppose that it is in your best interest as a citizen to not even have a clue what they are doing with all that raw compute power in dark corners of government?

Let’s not forget that Google, Apple, Facebook, Twitter and Microsoft each have the theoretical equivalents of this scale of computing, too, although it is distributed around the world in smaller chunks.

Perhaps Ray Kurzweil was correct in his prediction of when the “singularity” might occur. Any day now…

You’re - brilliant, wonderful, amazing!