Exascale computing: what can be done with that much supercomputing power?

What is a zettabyte, what is exascale supercomputing, and what could be achieved with that massive amount of computing and storage resources, by entities that the public doesn't even know about?

In this post, I’m going to introduce you to some fresh ideas about massive-scale data centers and supercomputing—like the ones used by certain three-letter agencies—and speculate about a possible use case for that enormous scale of storage and computing.

Do I know for certain what they use these massive computing resources for? No, I don’t.

What I’m going to discuss is (hopefully) just a thought exercise, one that I’ll use to teach you some important concepts. Then I’ll get you to think about this question: are we all OK with governments around the world amassing an enormous scale of computing and storage capability without us knowing the slightest thing about what they are using it for, whatever that ends up being?

Why should we passively accept this?

Along the way, I’ll teach you a little bit about gigabytes and even bigger numbers; teach you a little bit about how modern data backup works; and then tie these threads together into the main topic.

Grab some coffee to wake up your brain; you’ll need it by the end.

I always start these posts with a little bit of background to set the scene and add some context. So here we go.

I wrote about modern supercomputing in a recent Substack post. In the early 1990s, I worked with a team on satellite imaging systems at a defense contractor (TRW Space and Defense), and later at a supercomputing startup in Pasadena.

While I was at TRW, my team worked on developing the world’s first nearly real-time, “Internet-based” delivery of Earth-facing, high-resolution, full-color satellite photographs for NASA (the precursor to what you now see on Google Earth).

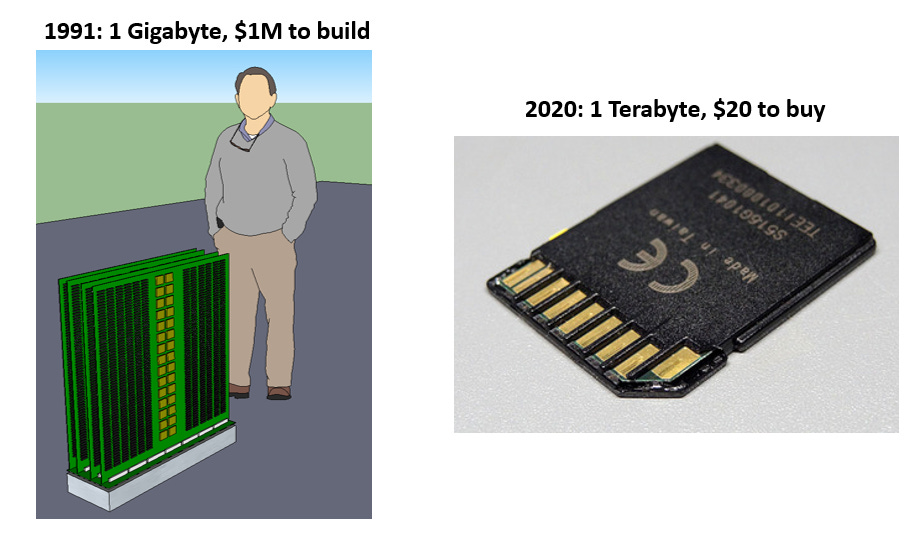

As part of that project, we had to build a 1-gigabyte memory subsystem to store the satellite images temporarily.

At that time, a 1-gigabyte memory didn’t exist; the module we designed and built was the size of a suitcase, and it took a team of about a dozen Caltech whiz kids the better part of a year to design, build, and test.

It cost about $1M, all in, to build it back then. Today you can get an SD memory card for your camera or phone that has one thousand times as much storage as that, fits in a spoon, and costs about $20.

At that time in 1990, far fewer people had computers at home, and most of the general public didn’t know what a “megabyte” or “gigabyte” was. I suspect a lot of people still don’t really know; but what they do know is when they go buy a new iPhone, they want one with 256 “gigs” or more for all their photos and music.

If you ask them what a “gig” is, they can’t say, other than “more space for my stuff.”

So let’s demystify things. A “gigabyte” means one billion “bytes” of data. Three or four bytes are roughly what is needed to store the complete color information for one “pixel” (a tiny dot of color) in a high-resolution digital photo in raw form.

If you have a fancy new iPhone, it might be able to capture 12-Megapixel photos, which means that the photo has 12 million tiny dots of color that in aggregate make up the photo; so roughly speaking, it might need (rounding things up) about 50 megabytes (50 million bytes) to store a single full-resolution photo in raw, uncompressed form. Don’t fret if this isn’t super precise; let’s just go with this for now.

A gigabyte is the term for a billion bytes (strictly, its binary cousin, the gibibyte, is 2³⁰ or 1,073,741,824 bytes, but we’re going to ignore that subtle distinction for now). At roughly 50 megabytes per raw photo, that’s enough storage space for only about 20 uncompressed photos.

If you have 20,000 photos on your phone, storing them uncompressed would take about a terabyte (1,000 gigabytes). In reality, of course, photos are “compressed”, which means they take up much less storage space: at a few megabytes each, 20,000 photos might use up about 100 gigabytes. We’re just trying to get a feel for the sizes of things in a rough manner, so this is good enough for our purposes for now.

Just use the analogy “100 gigabytes equals 20,000 photos” as an admittedly crude rule-of-thumb, and we’ll press on.
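Here is the same back-of-the-envelope math as a short Python sketch. It assumes 4 bytes per pixel (the upper end of the estimate above) and decimal units (1 GB = 10⁹ bytes), and it shows what the “100 gigabytes equals 20,000 photos” rule of thumb implies about compression.

```python
# Back-of-the-envelope photo storage math, in decimal (SI) units.
MEGAPIXELS = 12_000_000      # a 12-megapixel photo
BYTES_PER_PIXEL = 4          # assumption: upper end of the "three or four bytes" estimate
GB = 1_000_000_000           # one gigabyte = one billion bytes

raw_photo_bytes = MEGAPIXELS * BYTES_PER_PIXEL       # about 48 MB per raw photo
raw_photos_per_gb = GB // raw_photo_bytes            # whole uncompressed photos per GB

# The "100 GB = 20,000 photos" rule of thumb implies this average size per photo...
implied_photo_bytes = (100 * GB) // 20_000
# ...which is roughly a tenth of the raw size once compression is applied.
compression_ratio = raw_photo_bytes / implied_photo_bytes

print(f"Raw photo: {raw_photo_bytes / 1e6:.0f} MB")
print(f"Uncompressed photos per GB: {raw_photos_per_gb}")
print(f"Implied compressed photo size: {implied_photo_bytes / 1e6:.0f} MB")
print(f"Implied compression ratio: about {compression_ratio:.1f}x")
```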

In case you weren’t aware, the National Security Agency has been building a new data center in Utah. In the mid-1990s, I installed supercomputers made by Paracel, the company I worked for back then, at a data center the NSA has on the East Coast. So when I come across news of this kind, it gets my attention.

According to National Public Radio, the new Utah data center will have facilities that are able to house five zettabytes of data and the associated computing power to use it. What on God’s green Earth is a “zettabyte”?

The prefix “mega-” means million; “giga-” means billion; “tera-” means trillion; “peta-” means quadrillion (a thousand trillion); “exa-” means quintillion (a million trillion); and “zetta-” means sextillion (a billion trillion). That’s a sexy number.

Put another way: five zettabytes of storage are equal to 5 trillion gigabytes. Let’s say that the average iPhone has 128 gigabytes (some have more, some less). In that case, five zettabytes is enough storage for the equivalent of 39 billion iPhones.
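A few lines of Python make the prefix ladder and the iPhone comparison explicit. The 128 GB iPhone is the same working assumption used in the text.

```python
# Decimal (SI) prefixes from the text, as powers of ten.
PREFIXES = {
    "mega": 10**6,    # million
    "giga": 10**9,    # billion
    "tera": 10**12,   # trillion
    "peta": 10**15,   # quadrillion
    "exa": 10**18,    # quintillion
    "zetta": 10**21,  # sextillion
}

utah_bytes = 5 * PREFIXES["zetta"]             # five zettabytes
gigabytes = utah_bytes // PREFIXES["giga"]     # expressed in gigabytes
iphone_bytes = 128 * PREFIXES["giga"]          # assumption: a 128 GB iPhone
iphones = utah_bytes // iphone_bytes           # how many such phones would fit

print(f"{gigabytes:,} GB")      # 5,000,000,000,000 GB
print(f"{iphones:,} iPhones")   # 39,062,500,000 iPhones
```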

Remember, we only have 7 billion people on earth…and not all of them take 20,000 high-resolution 12-megapixel photos…

Let’s look at this from another historical perspective.

“As of 2009, the World Wide Web was estimated to comprise nearly half a zettabyte (ZB) of data. The zebibyte (ZiB), a related unit that uses a binary prefix, represents 1024⁷ bytes.” (Wikipedia)

So on this basis, 5 zettabytes are enough to store 10 times as much as the entire World Wide Web contained in 2009. Obviously, there is much more on the web now than then; but also obviously, much of it is sheer garbage that isn’t worth preserving (TikTok, Twitter, etc.). But I digress.
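The gap between the decimal prefixes used in this post and the binary ones in the quoted definition is easy to check, along with the “10 times the 2009 Web” comparison. This sketch treats “nearly half a zettabyte” as exactly 0.5 ZB, which is an assumption.

```python
ZB = 10**21          # zettabyte (decimal prefix)
ZiB = 1024**7        # zebibyte (binary prefix), i.e. 2**70 bytes
GB, GiB = 10**9, 1024**3

# The binary units are slightly larger, and the gap grows with each prefix step.
print(f"1 ZiB / 1 ZB = {ZiB / ZB:.4f}")   # roughly 18% larger
print(f"1 GiB / 1 GB = {GiB / GB:.4f}")   # roughly 7% larger

web_2009 = ZB // 2   # assumption: "nearly half a zettabyte" taken as 0.5 ZB
print(f"5 ZB is {5 * ZB // web_2009}x the 2009 Web")
```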

Anyhow… 5 zettabytes are an enormous “data lake.” So now let’s pivot a bit and talk about “backups”.

For as long as we’ve had computers, there have been long-term storage technologies for holding data when it isn’t immediately being used to compute something.

In the dark ages of computing, data was stored on “punch cards”. If you wanted to make sure your valuable and important punch-card based data couldn’t be lost or destroyed in a fire, you could make several duplicate sets of them and store each duplicate in fireproof boxes in different places, just in case something disastrous happened to your primary data location.

Later, computers began to use magnetic tape in various formats (reels, cartridges, cassettes, etc.) but the same principle applied: if you used these things to store critically important data, you could “copy” the data stored on them to other tapes and then salt away the backup copies of the tapes somewhere for safekeeping.

As we moved forward into the personal computing (PC) era, there were “floppy disks” for storing data; later came so-called “hard drives” (which have several little rotating platters of magnetic material inside, still in use today but fading away), and later still “solid state drives”, which aren’t really drives at all, because they have no motors and no rotating platters, only stacks of special memory chips.

But the problem of how to keep data safe by making “backup copies” has always been with us in one form or another, no matter what technology we used to store the data.

As the technology to store data evolved, so did the techniques used to make backup copies. At first, people did the obvious thing: literally copy every byte from an operational system over to a backup, one byte at a time.

The problem with this is that as storage capacities got bigger and bigger, it took longer and longer to do a full backup process, and while it was running, the system was tied up (you don’t want your data to be changing right in the middle of a process to back it up.)
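To see why full copies became painful as disks grew, here is a rough estimate of how long a full, every-byte backup takes. The 100 MB/s throughput is an assumed figure for illustration; real speeds vary widely with the hardware. At that rate, a 10 TB disk ties up the system for roughly 28 hours.

```python
THROUGHPUT = 100 * 10**6   # assumption: a sustained copy speed of 100 MB per second


def full_backup_hours(capacity_bytes: int, throughput: int = THROUGHPUT) -> float:
    """Hours needed to copy every byte of a disk at a constant throughput."""
    return capacity_bytes / throughput / 3600


# Full-backup time grows linearly with capacity.
for label, capacity in [("1 GB", 10**9), ("100 GB", 10**11), ("10 TB", 10**13)]:
    print(f"{label:>6}: {full_backup_hours(capacity):6.2f} hours")
```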

So, a clever idea emerged: make a full “byte by byte” copy of some original hard disk just one time; then, every time a block of data on the protected disk was changed afterward (that is, whenever a block was written to with new data), send only the changed block over to the backup system, rather than having to send the whole kit and kaboodle every time a backup was called for.

After all, if you have a 100GB hard disk with photos, chances are pretty good that 99.9% of that hard drive won’t be touched or changed very often, if at all; so, there is no need to keep copying the same “stale” data every single time you do a backup. You’ve already gotten it backed up once before.

Instead, you only need to “backup” that small fraction of data that was actually new or recently changed.
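The “send only the changed blocks” idea can be sketched in a few lines of Python. One caveat: the text describes tracking writes as they happen, while this minimal sketch instead detects changed blocks by comparing SHA-256 fingerprints of fixed-size blocks, a common alternative. The 4 KiB block size and the function names are illustrative assumptions, not any particular product’s design.

```python
import hashlib

BLOCK_SIZE = 4096  # assumption: 4 KiB blocks; real backup tools choose their own size


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Split data into fixed-size blocks and fingerprint each one with SHA-256."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block_index: block_bytes} for every block of `new` that differs from `old`."""
    old_hashes = block_hashes(old, block_size)
    delta = {}
    for idx, digest in enumerate(block_hashes(new, block_size)):
        if idx >= len(old_hashes) or old_hashes[idx] != digest:
            delta[idx] = new[idx * block_size:(idx + 1) * block_size]
    return delta


def apply_delta(backup: bytes, delta: dict[int, bytes], new_len: int,
                block_size: int = BLOCK_SIZE) -> bytes:
    """Patch the existing backup copy using only the changed blocks."""
    buf = bytearray(backup[:new_len].ljust(new_len, b"\0"))  # resize to the new length
    for idx, block in delta.items():
        start = idx * block_size
        buf[start:start + len(block)] = block
    return bytes(buf)


# Hypothetical demo: a 1 MB "disk" in which a single 10-byte region changes.
disk = bytes(1_000_000)
backup = disk                                   # the one-time full copy
edited = bytearray(disk)
edited[500_000:500_010] = b"new photo!"
edited = bytes(edited)

delta = changed_blocks(backup, edited)
print(f"{len(delta)} of {len(block_hashes(edited))} blocks need to be sent")  # 1 of 245
backup = apply_delta(backup, delta, len(edited))
assert backup == edited
```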

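The term for this is “differential backup”: it means (roughly speaking) that you only send the “differences” between the operational system and the existing backup copy over to the backup system. (Strictly, backup vendors distinguish a “differential” backup, which copies everything changed since the last full backup, from an “incremental” one, which copies only what changed since the most recent backup of any kind; the principle is the same.) Using our photos analogy, it means “backing up” all photos just once, the very first time, and then “backing up” only new photos every so often after that, to keep the backup “synchronized”.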

In this way, the length of time and resources required to ensure that you have a “faithful copy” are substantially reduced; you can get backups done much more quickly—almost as fast as the data itself is changed on your PC or phone.

Some of you may already use “cloud backup” services like Backblaze, Carbonite, or IDrive that do this sort of thing for your Mac or PC files. Services like iCloud for Apple or OneDrive for Microsoft essentially do something similar: they look for changes in your audio, video, or photo libraries on your PC or Mac and only sync those changes to your “cloud storage”. In this way, they “protect” all your favorite content by saving it to the cloud, and they do it by sending only the differences.
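At the level of whole files, the same idea looks roughly like this: keep an index of content fingerprints for everything already in the backup, and on each pass upload only files whose fingerprint is new. This is a hypothetical sketch; it is not how Backblaze, iCloud, or OneDrive actually work internally, and the “upload” is just a stand-in. A side effect of keying on content is that identical copies are only sent once.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Content hash used to recognize a photo the backup has already seen."""
    return hashlib.sha256(content).hexdigest()


def sync_new_photos(library: dict[str, bytes], backed_up: set[str]) -> list[str]:
    """'Upload' only photos whose fingerprint isn't in the backup index yet.

    Returns the names of the photos sent on this pass.
    """
    uploaded = []
    for name, content in sorted(library.items()):
        digest = fingerprint(content)
        if digest not in backed_up:
            backed_up.add(digest)      # stand-in for actually sending the bytes
            uploaded.append(name)
    return uploaded


# Hypothetical photo library: file name -> raw bytes.
library = {"beach.jpg": b"...beach...", "dog.jpg": b"...dog..."}
backup_index: set[str] = set()

print(sync_new_photos(library, backup_index))   # first pass: every photo
library["cake.jpg"] = b"...cake..."
print(sync_new_photos(library, backup_index))   # later pass: only the new one
```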

OK, now we have a basis of understanding of “differential backups” — the basic idea being that you only need to “copy” the small amount of whatever is brand new or recently changed on a device (PC or phone) to have a clean backup.

Now imagine this scenario. Suppose that you buy a brand-new iPhone at the AT&T store. Since the apps that come with it are already pre-installed, presumably there could be a “backup” image somewhere of a brand-new iPhone with all of the same base apps pre-copied, exactly as it was still in the box.

A perfect clone, waiting to be updated. Until you turn on the newly purchased phone and start configuring it, someone could have that ‘ghost’ version of a brand-new iPhone ready to receive differential backups.

Now, if I had a way to “monitor” all of the downloaded data coming into that new iPhone over 5G, 3G, or Wi-Fi networks; if I had a way to monitor every keystroke that was made on the iPhone to create new text in messages or files; and if I had a way to capture every “photo” that was taken by the camera on that phone, then I could, in theory, keep an updated “clone” of that iPhone somewhere.

I could keep the “ghost phone” in some cloud data center, and constantly update it so that at all times, whatever the clone had on it exactly matches what is on the real iPhone in your pocket.

I could do this with relatively little resource usage (network bandwidth) if I exploited “differential backup” techniques: only sending over to the “clone” the few bits of data that were new or changed, and maybe only doing that when the phone was idle at night or something.
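To put “relatively little resource usage” in numbers, here is a rough calculation. Both inputs are assumptions chosen for illustration (a completely full 128 GB phone and about 200 MB of new data per day), not measurements.

```python
PHONE_BYTES = 128 * 10**9      # assumption: a completely full 128 GB phone
DAILY_CHANGE = 200 * 10**6     # assumption: ~200 MB of new photos, messages, etc. per day
SECONDS_PER_DAY = 86_400

# How much less data a nightly delta moves compared with a nightly full copy.
reduction = PHONE_BYTES // DAILY_CHANGE
# The same daily delta, trickled out evenly over 24 hours, in kilobits per second.
avg_kbps = DAILY_CHANGE * 8 / SECONDS_PER_DAY / 1_000

print(f"Sending only changes moves {reduction}x less data than a nightly full copy")
print(f"Average rate if spread over a day: {avg_kbps:.1f} kbit/s")
```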

Hmm. I wouldn’t need to “subpoena” your phone anymore to know what was on it, would I? I’d have a complete clone of it already. You could throw it in the ocean, and somewhere there would already be a backup of it.

It turns out that Google and Apple already do much of this for you anyway: if you’ve purchased a new phone, you already know that there is some “magic” way to transfer all the stuff from your old phone to the new one from your “iCloud” or your “Google” account, so you can very conveniently set up your new device to exactly match your old one. It’s not much of a stretch to imagine that this could be done by other agencies besides Apple or Google (and perhaps even with their covert assistance).

Here’s the final piece of the puzzle. For many of us, our phones have now become an extension of ourselves, our memories. They are highly personal. Everything we do—people we call or text, photos we take, music we like, recipes we download or save, articles we write, emails we receive—all of those are stored on those phones. They are an extension of “us”.

If someone had a “ghost phone” that contained everything that was on our real-world personal phones, and also kept track of the extended network of everyone we knew and interacted with (via social media, for example) that person could construct a “digital model” of us. A simulation.

After all, they would know almost everything about you given what’s on your phone and what’s on your social media profiles. They’d know who you talk to, when, how often, and about what; where you go for work, leisure, and shopping; what you take photos of; what your political leanings are; what your language and speech patterns are, and what words you use. From all of these sources of data, including what you buy online or at the store, they could assemble a pretty good “digital clone” of you in cyberspace somewhere.

What could be done with that? Well, using massive computing power in combination with all of that rich data, one could theoretically construct simulations of future events to game them out to see what might happen.

An “exascale” computer is one that can do a quintillion (10¹⁸) calculations per second, and it might be useful for these kinds of simulations. Exascale is only about twice the benchmark speed of Japan’s Fugaku supercomputer, which reached roughly 442 petaflops.
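Checking the comparison with Fugaku’s published LINPACK (HPL) result, taken here as about 442 petaflops, shows the gap between Fugaku and an exascale machine is well under one order of magnitude: closer to a factor of two.

```python
import math

EXAFLOPS = 10**18        # exascale: one quintillion floating-point operations per second
FUGAKU_FLOPS = 442e15    # assumption: Fugaku's ~442 petaflop LINPACK (HPL) result

ratio = EXAFLOPS / FUGAKU_FLOPS
print(f"Exascale / Fugaku = {ratio:.2f}x")
print(f"Orders of magnitude apart: {math.log10(ratio):.2f}")   # well below 1.0
```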

For example: given your political leanings, age and inferred behavioral psychology—and your susceptibility, or not, to mass formation hypnosis—someone could figure out things like: will you comply with vaccine mandates? Who will you vote for this year, and will you vote in person or by mail? Will you stay locked down, or stray outside? Wear a mask, or not?

If there is some emergency, are you the kind who will follow directions, or decamp to the hills? If you are predisposed to comply, then who do you routinely interact with, and what sort of influence do you have on them? Can you be used to influence them?

One could infer your influence power by looking at your “digital clone” in this cyber-world cloud, examining your reach and connectedness on social media, and comparing your data clone to the digital clones of people you interact with.

From your photos, comments, likes, and shopping preferences, and from your social media “follows and followers”, one could start to detect who leads whom into new ideas: that is, figure out who is likely to influence whom, and who follows whom.
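The post doesn’t say how “who leads whom” would actually be inferred. One standard, textbook way to score influence in a follower network is PageRank, where attention flows from followers to the people they follow. Here is a minimal, dependency-free power-iteration sketch on a hypothetical four-person network; the names, edges, and parameter values are made up for illustration.

```python
def pagerank(follows: dict[str, list[str]], damping: float = 0.85,
             iters: int = 100) -> dict[str, float]:
    """Power-iteration PageRank on a 'who follows whom' graph.

    An edge A -> B means A follows B, so rank (attention) flows toward B.
    """
    nodes = set(follows) | {t for targets in follows.values() for t in targets}
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iters):
        new = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            targets = follows.get(v, [])
            if targets:
                share = damping * rank[v] / len(targets)
                for t in targets:
                    new[t] += share
            else:  # someone who follows nobody: spread their rank evenly
                for t in nodes:
                    new[t] += damping * rank[v] / n
        rank = new
    return rank


follows = {  # hypothetical toy network
    "alice": ["carol"],
    "bob": ["carol", "alice"],
    "dave": ["carol"],
    "carol": ["alice"],
}
ranks = pagerank(follows)
for person, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{person:6s} {score:.3f}")
```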

If you are the type who won’t readily comply with directives and mandates, are there people in your network—family, friends, colleagues—who could persuade you to change your behavior? Can those people be “leveraged” to get you to “behave”? Are those people more or less susceptible to mass-formation hypnosis than you are?

With this sort of “simulated society” stored in some digital realm, inside an exascale computer with zettabytes of storage, one could model all sorts of potential future scenarios, political or otherwise, and try to forecast how things will likely progress by studying the interactions of the “virtual clones” of a large number of people in advance of the real-world scenario.
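The post leaves the modeling method unspecified. As a hypothetical, toy-scale illustration of “studying the interactions of virtual clones”, here is a linear-threshold cascade, a classic textbook model of how an idea spreads through a network. Everything in it (the names, the thresholds, and the choice of model itself) is an assumption. Running it from two different starting points shows how the same network can produce different outcomes.

```python
def threshold_cascade(follows: dict[str, list[str]], thresholds: dict[str, float],
                      seeds: set[str]) -> set[str]:
    """Linear-threshold-style cascade on a 'who follows whom' network.

    A person adopts an idea once the fraction of the people they follow who
    have already adopted it reaches that person's threshold. Seeds start as
    adopters. Repeats until a full pass produces no new adopters.
    """
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for person, sources in follows.items():
            if person in adopted or not sources:
                continue
            share = sum(s in adopted for s in sources) / len(sources)
            if share >= thresholds.get(person, 1.0):   # default: needs unanimity
                adopted.add(person)
                changed = True
    return adopted


# Hypothetical toy network and thresholds (lower threshold = easier to persuade).
follows = {
    "alice": ["carol"],
    "bob": ["alice", "frank"],
    "frank": [],
    "erin": ["bob", "frank"],
}
thresholds = {"alice": 0.5, "bob": 0.5, "erin": 0.6}

# Two "scenarios": the same network, seeded from different people.
for seed in ["carol", "frank"]:
    print(seed, "->", sorted(threshold_cascade(follows, thresholds, {seed})))
```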

If such a model were constructed, “they” would already know what the most probable outcome of some event might be.

It has been said recently that we all “live in a simulation”. The intent behind that statement is usually something completely different from what I’m getting at here; it comes from some radical ideas in theoretical physics about whether the Universe is “real” or is the product of some “simulated game” run by an advanced intelligence.

But now you can start to imagine how “societal level simulations” might be possible. Another kind of “simulation” with troubling implications.

If I were thinking fancifully, I might come up with a clever name for something like this: A Looking Glass. A way to “forecast” the future by modeling every human on earth digitally, using the information they save in their phones, and predicting how they will behave.

Is this what they really do with all that data and raw supercomputing power at the NSA Utah facility? Who knows—but do you think it is in your best interests NOT to know what they are doing with exascale supercomputers and 5 zettabytes of storage capacity?

Do you really think that those who do these things (all around the world these days) act purely out of concern for your well-being or safety?

The political events of the past 5 years should completely obliterate that naive thinking.

Time for a Great Awakening.

updates and references:

China Just Achieved A "Brain Scale" AI Computer | ZeroHedge
